The Rise of Deepfakes: How to Spot and Prevent Them

GeokHub

Deepfakes have rapidly moved from internet novelties to serious digital threats. Powered by AI, these ultra-realistic fake videos, audio clips, and images are being used to spread misinformation, commit fraud, and even influence elections. As deepfake technology becomes more accessible and convincing in 2025, learning to identify and guard against it is more important than ever.

What Are Deepfakes?

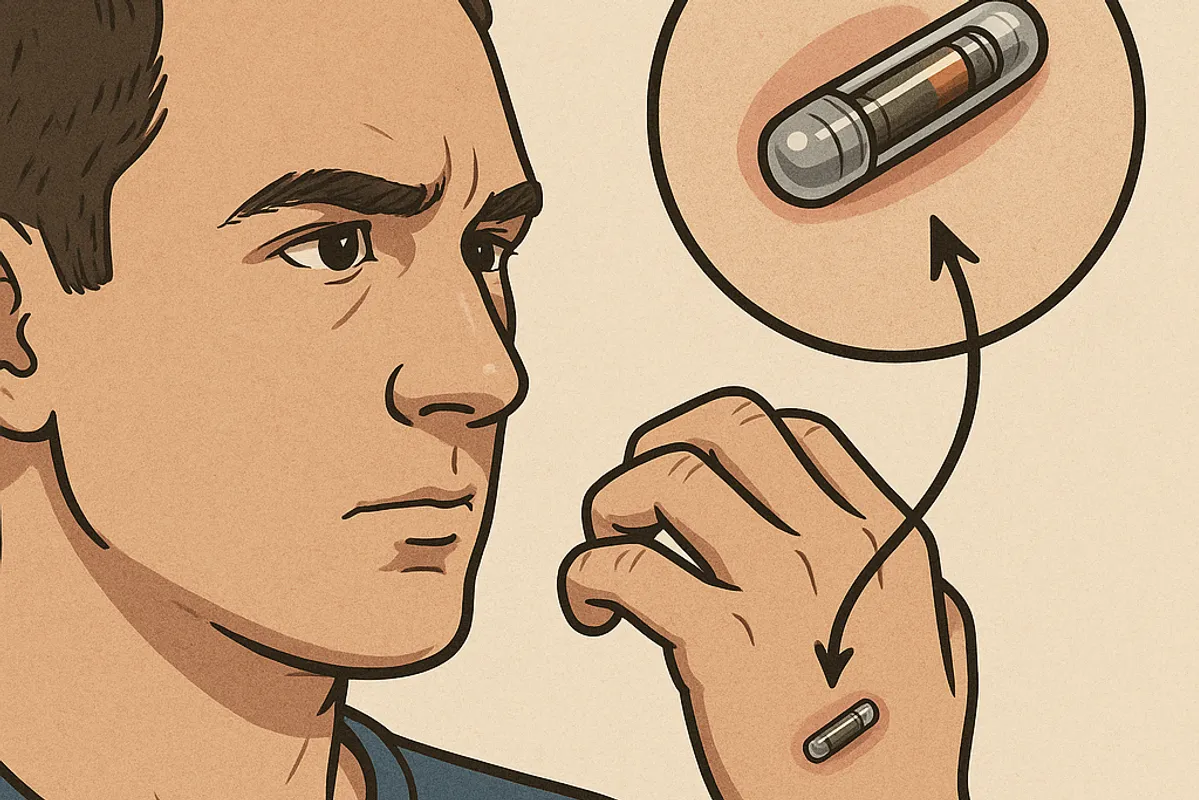

Deepfakes are synthetic media—videos, audio, or images—created using artificial intelligence, especially deep learning algorithms. They convincingly mimic real people’s appearances and voices, often making it difficult to distinguish them from genuine content.

Why Deepfakes Are Dangerous

- Disinformation: Used to create fake political speeches, interviews, or news segments.

- Fraud & Scams: Used to impersonate CEOs or family members, by voice or video, to trick employees or individuals into transferring money.

- Reputation Damage: Used in revenge campaigns, fake adult content, or doctored interviews.

- Erosion of Trust: Makes it hard to trust what we see or hear online, and makes it easier to dismiss genuine recordings as fake.

How to Spot a Deepfake

Even as the technology advances, many deepfakes still show subtle signs of manipulation. Look for:

- Unnatural facial movements: Blinking too rarely or too frequently, odd mouth movements, and stiff expressions.

- Inconsistent lighting and shadows: Deepfake overlays often fail to match the lighting of the original footage.

- Audio mismatches: The voice may sound off or robotic, or may not match the person’s usual way of speaking.

- Flickering around the face or edges: Edges may blur or “ghost” slightly during motion.

- Strange eye reflections: Lack of reflection or odd glinting in the eyes can be a giveaway.
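
The blinking cue in the list above can be turned into a rough numeric check. The Python sketch below assumes you already have a per-frame eye aspect ratio (EAR) from some face-landmark tool, which is not shown here. The function names, the 0.2 closed-eye threshold, and the 8 to 30 blinks-per-minute range are illustrative assumptions, not validated settings.

```python
from typing import List, Tuple

# Assumed values for illustration only; tune them against real footage.
EAR_CLOSED_THRESHOLD = 0.2                       # EAR below this counts as "eye closed"
PLAUSIBLE_BLINKS_PER_MIN: Tuple[int, int] = (8, 30)


def count_blinks(ear_per_frame: List[float],
                 threshold: float = EAR_CLOSED_THRESHOLD) -> int:
    """Count open-to-closed transitions in a sequence of per-frame EAR values."""
    blinks = 0
    eye_closed = False
    for ear in ear_per_frame:
        if ear < threshold and not eye_closed:
            blinks += 1          # a new blink starts on this frame
            eye_closed = True
        elif ear >= threshold:
            eye_closed = False   # eye reopened, ready for the next blink
    return blinks


def blink_rate_per_minute(ear_per_frame: List[float], fps: float) -> float:
    """Convert the blink count into blinks per minute of footage."""
    if not ear_per_frame or fps <= 0:
        raise ValueError("need at least one frame and a positive frame rate")
    duration_minutes = len(ear_per_frame) / fps / 60
    return count_blinks(ear_per_frame) / duration_minutes


def blink_rate_looks_unusual(ear_per_frame: List[float], fps: float) -> bool:
    """Return True when the blink rate falls outside the assumed plausible range."""
    low, high = PLAUSIBLE_BLINKS_PER_MIN
    rate = blink_rate_per_minute(ear_per_frame, fps)
    return rate < low or rate > high
```

An unusual blink rate is only a hint that a clip deserves a closer look. Short clips, heavy compression, or a speaker who simply blinks differently can all trigger it, so treat the result as one signal among the others in the list.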

Tools to Detect Deepfakes

- AI detection software: Tools like Microsoft Video Authenticator, Deepware Scanner, and Sensity (formerly Deeptrace) can analyze videos and estimate how likely they are to have been manipulated.

- Reverse image search: Helps trace the original source of images or frames.

- Metadata analysis: Examining a file’s metadata can reveal inconsistencies about its origin.
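
As a rough illustration of the metadata check, here is a minimal Python sketch. It assumes the metadata has already been extracted into a dictionary by some other tool; the keys `created`, `modified`, `software`, and `device`, the function names, and the list of software hints are all hypothetical and will differ from what real extraction tools report.

```python
from datetime import datetime
from typing import Dict, List, Optional

# Illustrative list only; a match means "was edited", not "is a deepfake".
EDITING_SOFTWARE_HINTS = ("photoshop", "after effects", "premiere")


def parse_timestamp(value: Optional[str]) -> Optional[datetime]:
    """Parse an ISO 8601 timestamp, returning None if it is missing or malformed."""
    if not value:
        return None
    try:
        return datetime.fromisoformat(value)
    except ValueError:
        return None


def find_metadata_red_flags(meta: Dict[str, str]) -> List[str]:
    """Return human-readable warnings about inconsistent or missing metadata."""
    flags: List[str] = []
    created = parse_timestamp(meta.get("created"))
    modified = parse_timestamp(meta.get("modified"))

    if created is None:
        flags.append("missing or unreadable creation time")

    # Only compare when both values are naive or both are timezone-aware.
    comparable = (
        created is not None
        and modified is not None
        and (created.tzinfo is None) == (modified.tzinfo is None)
    )
    if comparable and modified < created:
        flags.append("modified before it was created")

    software = meta.get("software", "").lower()
    if any(hint in software for hint in EDITING_SOFTWARE_HINTS):
        flags.append("processed with editing software")

    if not meta.get("device"):
        flags.append("no capture device recorded")
    return flags


if __name__ == "__main__":
    sample = {
        "created": "2025-03-02T10:00:00",
        "modified": "2025-03-01T09:00:00",
        "software": "Adobe Photoshop 25.0",
        "device": "",
    }
    for flag in find_metadata_red_flags(sample):
        print("warning:", flag)
```

A flag here is a reason to dig further, not proof of a fake. Many platforms strip metadata when a file is uploaded, and metadata itself can be edited, so a clean result does not prove a file is genuine either.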

How to Protect Yourself and Others

- Stay skeptical: Question videos and audio that seem too shocking or emotionally charged.

- Verify sources: Prefer content from verified or reputable outlets, and be cautious about WhatsApp forwards and social media clips that have no clear origin.

- Educate your network: Help others, especially older or less tech-savvy individuals, recognize deepfakes.

- Use authentication tools: Watermarking, blockchain-based records, and content provenance standards such as C2PA are being developed to verify the authenticity of digital content. Use them where they are available.

- Support legislation: Push for and follow emerging laws that regulate the malicious use of deepfakes.
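
To make the idea behind authentication tools more concrete, here is a minimal Python sketch of tamper detection. It is not how watermarking or blockchain systems work internally: it swaps in a much simpler technique, a keyed hash (HMAC-SHA256) over the file bytes, and assumes the publisher and the verifier share a secret key. Real provenance systems typically rely on public-key signatures instead. The function names and the demo key are hypothetical.

```python
import hashlib
import hmac


def sign_content(content: bytes, secret_key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag that the publisher ships alongside the content."""
    return hmac.new(secret_key, content, hashlib.sha256).hexdigest()


def verify_content(content: bytes, secret_key: bytes, signature: str) -> bool:
    """Check that the content still matches the tag the publisher issued."""
    expected = sign_content(content, secret_key)
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, signature)


if __name__ == "__main__":
    key = b"demo-key-for-illustration-only"   # hypothetical shared secret
    original = b"raw bytes of the published video"
    tag = sign_content(original, key)

    print(verify_content(original, key, tag))                  # unchanged content
    print(verify_content(original + b" edited", key, tag))     # altered content
```

The point of the sketch is that any change to the content, even a single byte, makes verification fail. It says nothing about whether the original recording was truthful, only whether it has been altered since it was signed.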

The Future of Deepfakes

As AI becomes more powerful, deepfakes will become harder to detect. At the same time, researchers are building AI-powered detection systems and advocating for content authenticity protocols. Combating deepfakes will be a constant game of catch-up—but awareness is the first line of defense.

Final Thoughts

Deepfakes represent one of the most pressing digital threats of our time. By staying informed, developing digital literacy, and using the right tools, individuals and organizations can defend against manipulation and maintain trust in a world where seeing is no longer believing.